Anthropic brings its safety-first AI chatbot Claude to Canada

Posted Jun 5, 2024 12:00:11 PM.

Last Updated Jun 5, 2024 12:01:09 PM.

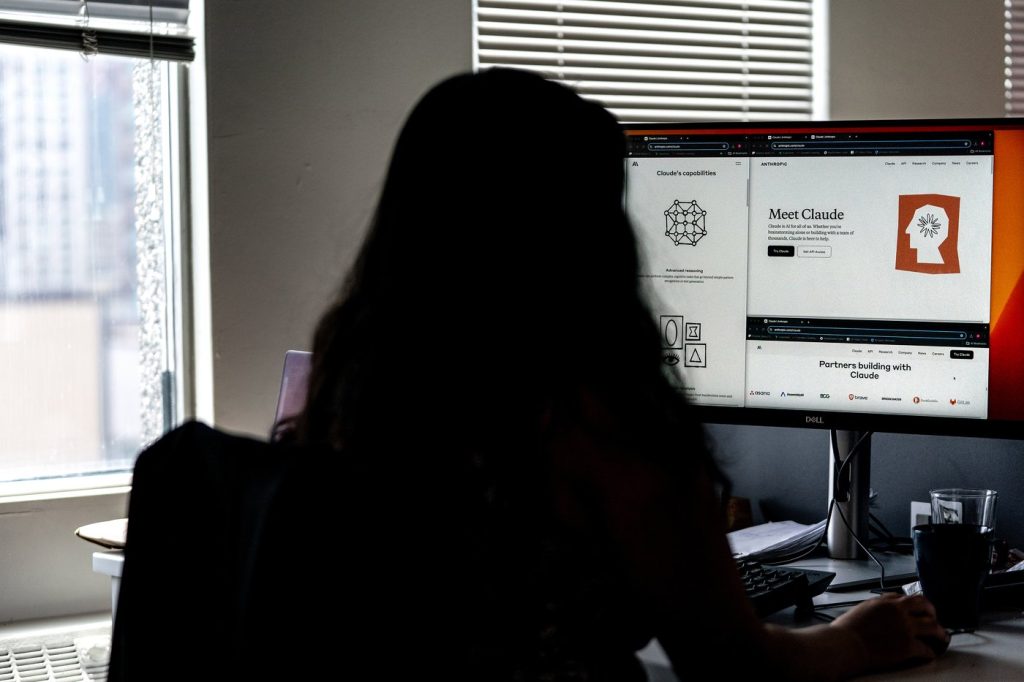

One of the buzziest artificial intelligence chatbots in the global tech sector has arrived in Canada with the hope of spreading its safety-first ethos.

Claude, which can answer questions, summarize materials, draft text and even code, made its Canadian debut Wednesday.

The technology, launched by San Francisco-based startup Anthropic in 2023, was available in more than 100 other countries before reaching Canada.

It’s crossing the border now because the company has seen signs that Canadians are keen to dabble with AI, said Jack Clark, one of the company’s co-founders and its head of policy.

“We have a huge amount of interest from Canadians in this technology, and we’ve been building out our product and also compliance organizations, so we’re in a position to operate in other regions,” he said. (The company made its privacy policy clearer and easier to understand ahead of the Canadian launch.)

Claude’s Canadian debut comes as a race to embrace AI has materialized around the world, with tech companies rushing to offer more products capitalizing on large language models — the expansive and data-intensive underpinnings on which AI systems are built.

While Canada has had access to many of the biggest AI products, some chatbots have been slower to enter the country.

Google, for example, brought its Gemini chatbot to Canada only in February, having held off while it negotiated with the federal government over legislation requiring it to compensate Canadian media companies for content posted on or repurposed by its platforms.

Despite the delays, Canadians have dabbled with many AI systems including Microsoft’s Copilot and OpenAI’s ChatGPT, which triggered the recent AI frenzy with its November 2022 release.

Anthropic’s founders met at OpenAI, but split off to form their own company before ChatGPT’s debut and quickly developed a mission to make their offering, Claude, as safe as possible.

“We’ve always thought of safety as something which for many years was seen as an add-on or a kind of side quest for AI,” Clark said.

“But our bet at Anthropic is if we make it the core of the product, it creates both a more useful and valuable product for people and also a safer one.”

As part of that mission, Anthropic does not train its models on user prompts or data by default. Rather, it uses publicly available information from the internet, datasets licensed from third-party businesses and data that users choose to provide.

It also relies on Constitutional AI, an approach in which AI systems are given a set of values to train on and operate around, so they are less harmful and more helpful.

At Anthropic, those values include the Universal Declaration of Human Rights, which highlights fair treatment for people regardless of their age, sex, religion and colour.

Anthropic’s rivals are taking note.

“Whenever we gain customers — and it’s partly because of safety — other companies pay a lot of attention to that and end up developing similar things, which I think is just a good incentive for everyone in the industry,” Clark said.

He expects the pattern to continue.

“Our general view is that safety for AI will kind of be like seatbelts for cars and that if you figure out simple enough, good enough technologies, everyone will eventually adopt them because they’re just good ideas.”

Anthropic’s dedication to safety comes as many countries are still in the early stages of shaping policies that could regulate how AI can be used and minimize the technology’s potential harms.

Canada tabled an AI-centric bill in 2022, but it won’t be implemented until at least 2025, so the country has resorted to a voluntary code of conduct in the meantime.

The code asks signatories like Cohere, OpenText Corp. and BlackBerry Ltd. to monitor AI systems for risks and test them for biases before releasing them.

Asked whether Anthropic would sign Canada’s code, Clark wouldn’t commit. Instead, he said the company was focused on global or at least multi-country efforts like the Hiroshima AI Process, which G7 countries used to produce a framework meant to promote safe, secure and trustworthy AI.

This report by The Canadian Press was first published June 5, 2024.

Tara Deschamps, The Canadian Press